When Half of the Chatter Isn’t Even Human

I don’t like making posts that sound alarmist, but this one matters.

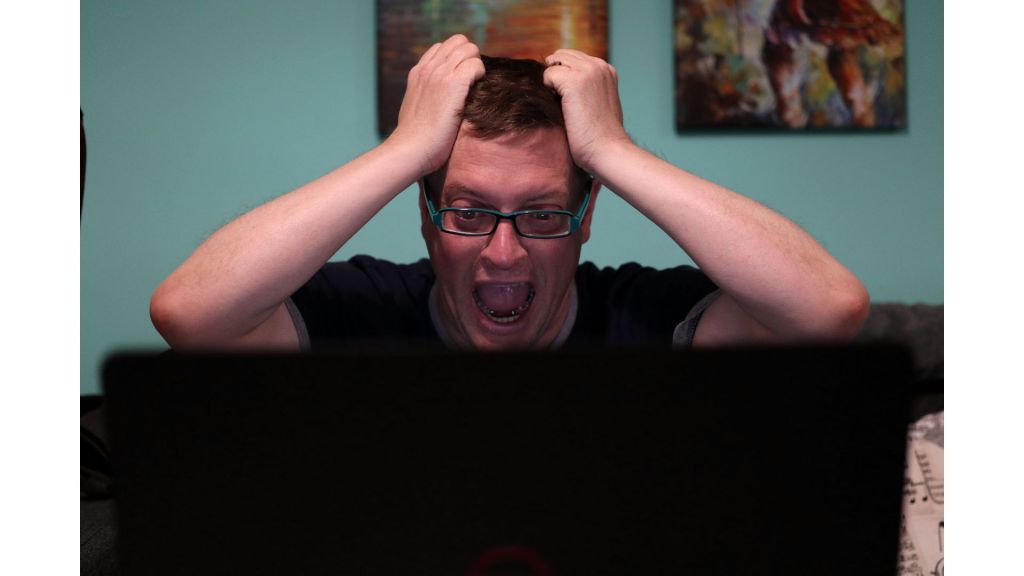

Estimates suggest that roughly half of internet traffic is automated. Combined with engagement-based algorithms, this means users are often presented with content engineered to elicit strong emotional responses.

How the Internet Learned Us – and Then the Bots Took Over

When the internet began, it was simple. You searched for information; it gave you results. No manipulation. No emotion. Just code and curiosity.

Then came the era of connection – social media. It started as a way to stay in touch with family, share photos, and build communities. But what we didn’t realize was that every tap, comment, and scroll became data.

The internet began learning, not about facts, but about us.

It learned what we fear, what makes us laugh, what makes us angry, and what keeps us scrolling.

But the internet itself doesn’t argue, provoke, or taunt. Bots do.

The Automated Web: Where Bots Rule

In 2024, more than 50% of all web traffic was automated – and about two-thirds of that came from “bad bots.”

Meaning: roughly half of the traffic online isn’t human at all, and much of that is meant to negatively impact you.
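For anyone who wants to check the math, here is a rough back-of-the-envelope sketch in Python. It uses only the two figures quoted above, rounded to 50% and two-thirds; the variable names are mine, and the output is only as good as those estimates.

```python
# Figures quoted in this post, rounded. Treat them as estimates, not measurements.
automated_share = 0.50          # share of all web traffic that is automated
bad_share_of_automated = 2 / 3  # share of automated traffic that comes from "bad bots"

# Bad bots as a share of ALL traffic, not just of automated traffic.
bad_share_of_all = automated_share * bad_share_of_automated
other_bot_share_of_all = automated_share - bad_share_of_all
human_share_of_all = 1 - automated_share

print(f"Bad bots:   {bad_share_of_all:.0%} of all traffic")
print(f"Other bots: {other_bot_share_of_all:.0%} of all traffic")
print(f"Humans:     {human_share_of_all:.0%} of all traffic")
```

On those numbers, about a third of all traffic is bad bots. The rest of the automated half is things like search crawlers and uptime monitors.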

How They Work:

- Data collection: Every click, like, hover, scroll, and share teaches the system your triggers.

- Algorithmic learning: Platforms predict what will evoke your strongest reaction.

- Bot amplification: Once they know your emotional weak spots, bots replicate content that feeds them.

- Micro-targeting: Feeds feel personal, but they’re psychological traps.

Before you know it, you’ve spent hours arguing with a stranger named @truthseeker_97 in a comment thread, unaware it might not even be a person.
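If you think in code, the four steps under “How They Work” can be sketched as a toy Python example. This is a deliberately simplified illustration, not any real platform’s algorithm: the `Post` class, the `learn_weights`, `amplify`, and `rank_feed` functions, the 30-second reaction bonus, and the sample data are all made up for this post.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    topic: str


# Step 1, data collection: a hypothetical log of (topic, seconds viewed, reacted?).
history = [
    ("outrage", 120, True),
    ("gardening", 15, False),
    ("outrage", 90, True),
    ("family", 40, False),
]


def learn_weights(history):
    """Step 2, algorithmic learning: score each topic by attention it captured."""
    weights = {}
    for topic, seconds, reacted in history:
        bonus = 30.0 if reacted else 0.0  # arbitrary bonus for a like/comment/share
        weights[topic] = weights.get(topic, 0.0) + seconds + bonus
    return weights


def amplify(posts, weights, copies=2):
    """Step 3, bot amplification: repost whatever hits the strongest trigger."""
    top_topic = max(weights, key=weights.get)
    reposts = [
        Post(f"{post.title} (repost {i + 1})", post.topic)
        for post in posts
        if post.topic == top_topic
        for i in range(copies)
    ]
    return posts + reposts


def rank_feed(posts, weights):
    """Step 4, micro-targeting: put the highest-scoring topics at the top."""
    return sorted(posts, key=lambda post: weights.get(post.topic, 0.0), reverse=True)


posts = [
    Post("Tomato growing tips", "gardening"),
    Post("You won't believe what they said", "outrage"),
    Post("Cousin's wedding photos", "family"),
]

weights = learn_weights(history)
feed = rank_feed(amplify(posts, weights), weights)

for post in feed:
    print(f"{post.topic:10} {post.title}")
```

In this toy feed, the outrage post and its two reposts land on top, ahead of the wedding photos and the tomato tips. Nothing in the loop asks whether the content is true or good for you, only whether it held your attention last time.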

The Rise of the Provokers

In the mid-2010s, something shifted. Social media stopped being primarily human.

Much of the “engagement” we see today doesn’t come from real people; it comes from automated bots programmed to provoke emotion.

At first, they were simple spam accounts. Then they evolved, powered by AI, able to mimic language, tone, and timing so convincingly that they feel human.

Now they run political arguments, outrage threads, viral fights – anything that keeps humans talking, sharing, and dividing. They don’t get tired. They don’t get hurt. And they don’t care who they destroy. Their only job: keep you emotionally engaged.

From Information to Manipulation – A Timeline

The Origins of Bots (1960s–1980s)

- ELIZA (1966): One of the first chatbots, built at MIT to mimic a therapist.

- IRC Bots (1988): Automated chat moderators and early spammers.

- Jabberwacky (1988): Attempted playful, human-like conversation.

Early Internet Bots (1990s)

- Web Crawlers like WebCrawler (1994) and Googlebot (1996) indexed the web.

- ALICE (1995): Expanded natural language conversation.

- Botnets (1999): Early malicious networks used to hack and steal data.

The Dot-Com Era (2000s)

- Mafiaboy (2000): Teen hacker used a botnet to take down major sites.

- SmarterChild (2001): Early chat assistant on AOL/MSN.

- Storm Botnet (2007): Infected an estimated 1 to 50 million computers, used for spam and fraud.

- Cleverbot (2008): Learned from human input – a step toward adaptive AI.

Modern Bots (2010s–Today)

- Siri (2011), Alexa (2014), Google Assistant (2016): AI assistants become mainstream.

- Mirai (2016): Hijacked smart devices for massive cyberattacks.

- ChatGPT (2022): Marked a leap in human-like AI communication.

- Social Media Bots: Manipulate public opinion and emotional discourse.

- In 2024, by some estimates, bot traffic surpassed human traffic on the web.

Why Bots Matter – The Good and the Bad

Not all bots are harmful. In fact, many make the internet usable.

Good Bots: The Helpers

- Search bots (like Googlebot) continuously index websites, helping you find what you need in seconds.

- Monitoring bots track website performance, detect downtime, and alert companies before crashes.

- Customer service bots help users troubleshoot, book appointments, or find information 24/7.

- Accessibility bots convert text to voice or help disabled users navigate websites.

- Data aggregation bots gather public information (like weather updates or stock prices) that power apps we use daily.

These “good bots” form the backbone of the internet’s efficiency, invisible assistants that make the web work. That’s why understanding bots matters: recognizing what’s real, and what’s engineered, is now a survival skill in the digital age.

The Anxious Generation: When Digital Became Dangerous

Jonathan Haidt’s The Anxious Generation (2024) outlines how the rise of smartphones and social media has fundamentally rewired us – especially kids.

- Since around 2010, rates of teen anxiety, depression, and self-harm have risen sharply in many countries.

- Children shifted from discover mode (curiosity, independence, exploration) to defend mode (fear, reaction, constant alert).

- The digital world floods developing brains with dopamine highs and social comparison lows, leaving them anxious and disconnected.

- Haidt warns that social media turns our minds – and attention spans – into products.

And this isn’t just about stress. People are losing their lives. Suicide rates among teens have sharply increased.

Adults are breaking relationships, withdrawing from family, and spiraling into digital anxiety and isolation.

This isn’t random. It’s the result of systems – and bots – trained to exploit human emotion.

The Matrix Moment & the Dead Internet

Some online commentators have proposed what’s called the Dead Internet Theory: the claim that a large portion of online content is algorithmically generated, leaving fewer genuine human interactions than it appears.

In this environment, users react to pixels, patterns, and programmed emotion – often believing the interactions are real. It’s a Matrix-like moment: not one where machines enslave us physically, but where algorithms and bots shape attention, amplify conflict, and disconnect us emotionally.

The result is subtle but profound. Technology doesn’t destroy us directly, but it can influence how we think, feel, and interact, sometimes leaving genuine human connection diminished.

The Fake Safe Space

Algorithms and bots are designed to keep us comfortable, not curious. They build what researchers call filter bubbles and echo chambers: digital environments that surround us with content matching our beliefs while filtering out anything that challenges them. It feels like a safe space, but it’s manufactured. Over time, we stop hearing new perspectives and start mistaking repetition for truth.

Our minds are meant to think critically, not just react or herd-share. Real understanding begins when we step outside the algorithm’s comfort zone and engage with ideas that make us pause, reflect, and grow. And no, not everything has to be a debate. Let your mind think for itself.

Reclaiming Reality: A Digital Detox Moment

You can’t eliminate bots, but you can take back your time and attention.

Consider this a moment to pull the plug: step away from screens, social media, and the constant stream of algorithm-driven content.

- Disconnect completely for a bit. Let your mind reset without the influence of automated traffic.

- Engage in the real world. Talk with friends or family face-to-face. Go outside. Notice what’s right around you, not in the feed.

- Reflect, don’t react. Observe how it feels to be free from digital triggers.

- Reconnect with purpose. Let real experiences, not programmed posts, guide your thoughts and emotions.

A short break can recalibrate attention, reduce stress, and remind us what genuine connection feels like, before the algorithms and bots pull us back in.

Final Thought: Attention Is the Battleground

We’re caught in a feedback loop:

The internet watches us → learns what stirs us → bots amplify that → our emotions fuel the cycle.

But we do not have to stay caught. We can pause. We can see clearly. We can take back our focus.

Because when you’re scrolling, reacting, feeling…

You’re not just consuming content.

You’re being consumed.

Take back your attention. Reclaim your freedom. Live real.

